Citation info: Mingxiao Li, Song Gao, Feng Lu, Hengcai Zhang. (2019) Reconstruction of human movement trajectories from large-scale low-frequency mobile phone data. Computers, Environment and Urban Systems, Volume 77, September 2019, 101346. DOI: 10.1016/j.compenvurbsys.2019.101346

Abstract

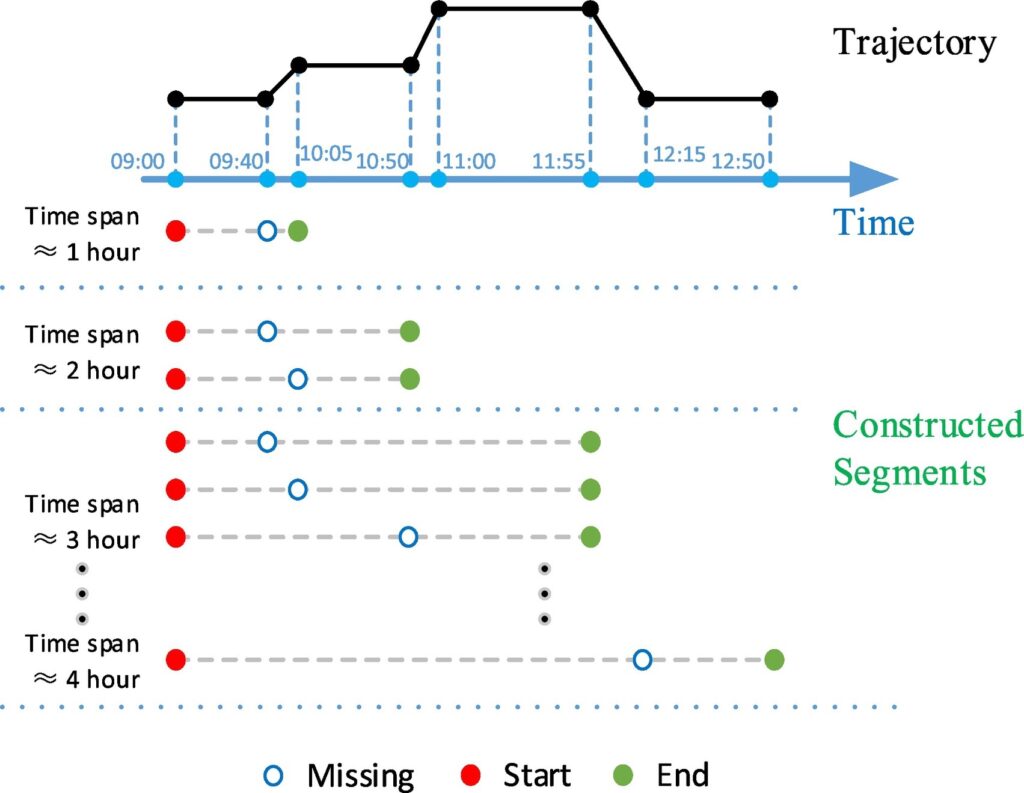

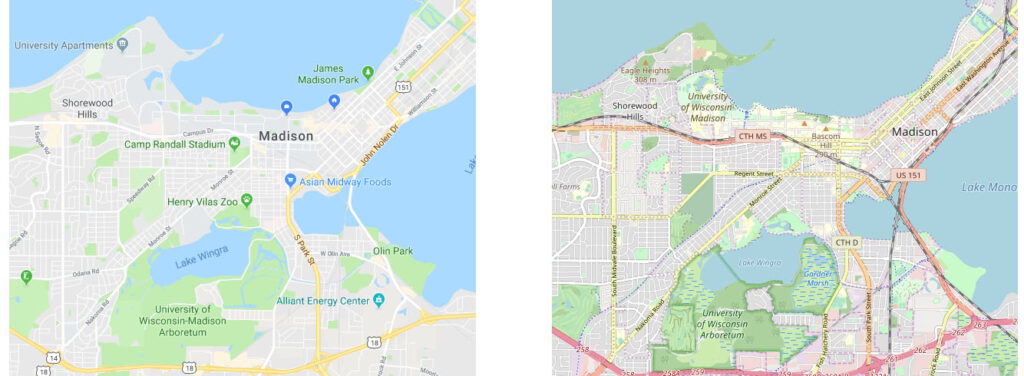

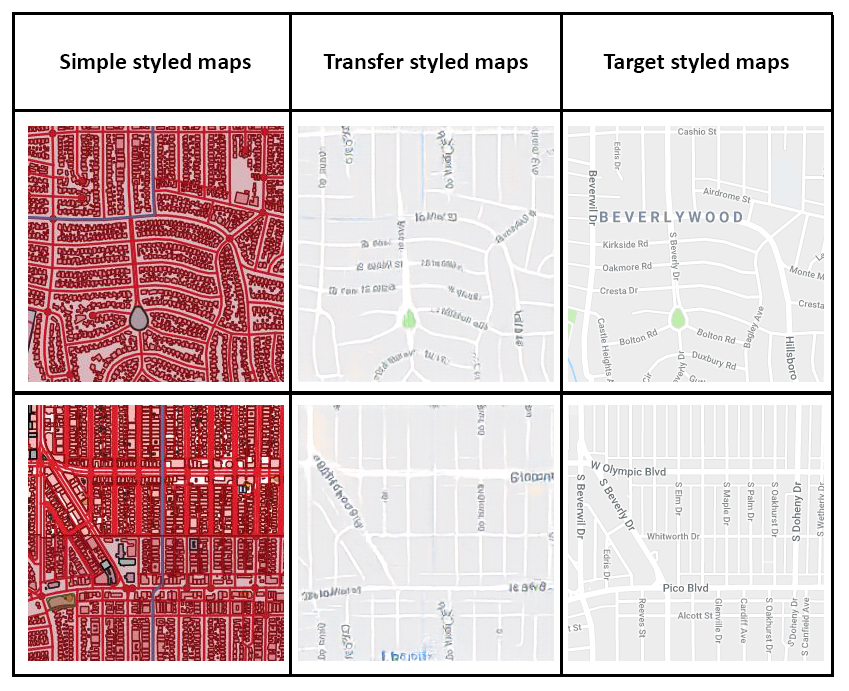

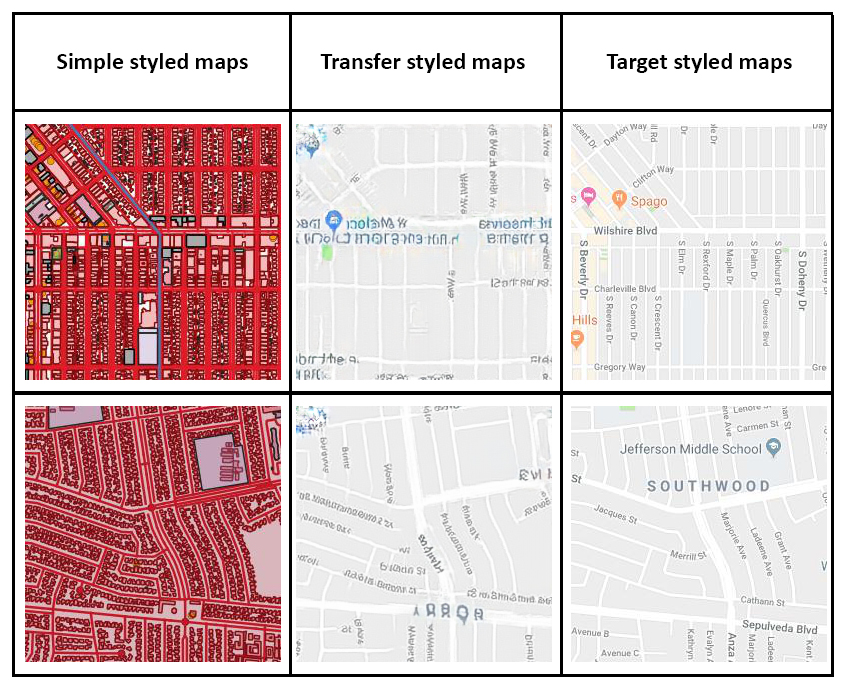

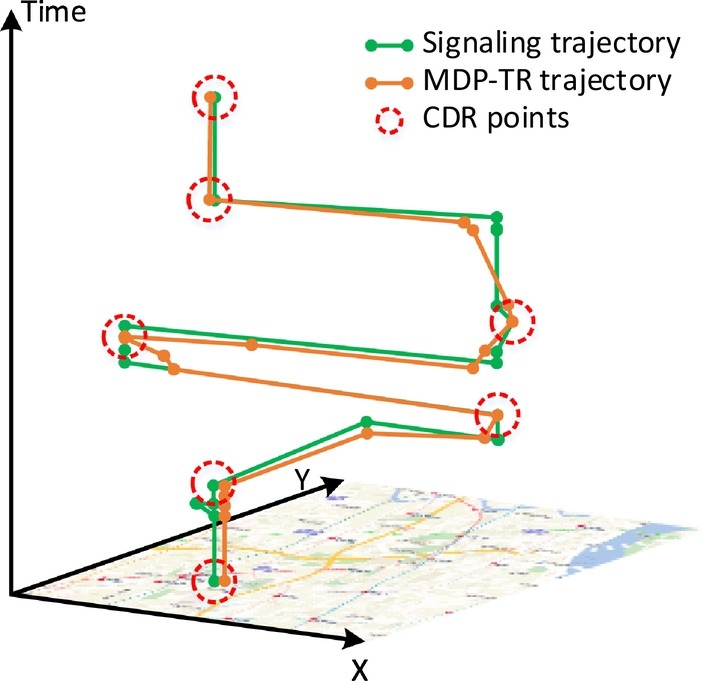

Understanding human mobility is important in many fields, such as geography, urban planning, transportation, and sociology. Because of their wide spatiotemporal coverage and low operational cost, mobile phone data have been recognized as a major resource for human mobility research. However, mobile phone data are sparse, whereas many applications require fine-scale results, which makes trajectory reconstruction of considerable importance. Although there have been initial studies on this problem, existing methods rarely consider the effect of similarities among individuals or the spatiotemporal patterns of missing data. To address this issue, we propose a multi-criteria data partitioning trajectory reconstruction (MDP-TR) method for large-scale mobile phone data. In the proposed method, a multi-criteria data partitioning (MDP) technique is used to measure the similarity among individuals in near real-time and to investigate the spatiotemporal patterns of missing data. Building on this technique, trajectories are then reconstructed from mobile phone data using classic machine learning models. We verified the method on a real mobile phone dataset covering 1 million individuals and over 15 million trajectories in a large city. The results indicate that the MDP-TR method outperforms competing methods in both accuracy and robustness. We argue that the MDP-TR method can be used effectively to capture highly dynamic human movement states and to improve the spatiotemporal resolution of human mobility research.