Our paper, "Transferring Multiscale Map Styles Using Generative Adversarial Networks," has been accepted for publication in the International Journal of Cartography.

DOI: 10.1080/23729333.2019.1615729

Authorship: Yuhao Kang, Song Gao, Robert E. Roth.

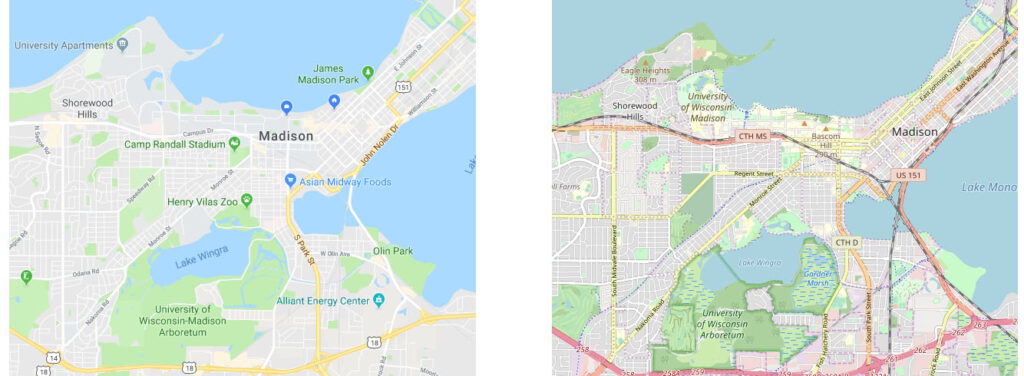

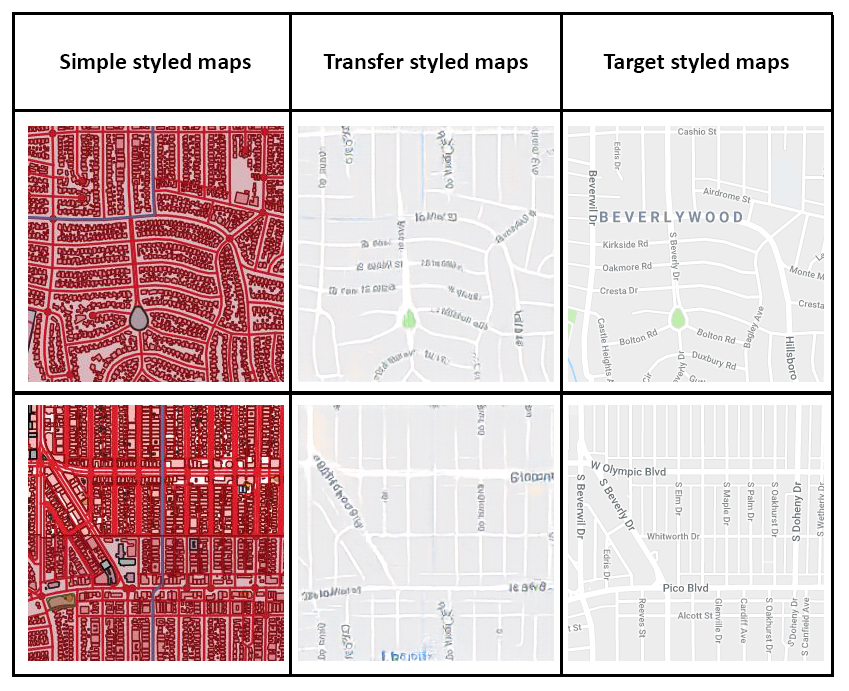

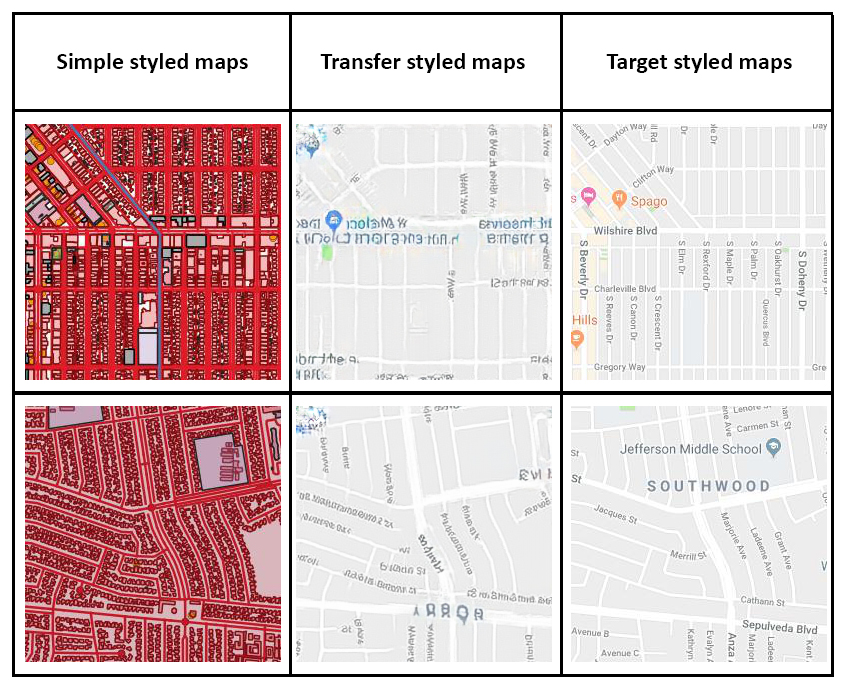

This paper proposes a methodological framework for transferring cartographic styles between different kinds of maps. Given raw GIS vector data as input, the system automatically renders the data in a target map style without requiring CartoCSS or Mapbox GL style specification sheets. The style transfer is performed with generative adversarial networks (GANs). The study explores the potential of applying artificial intelligence to cartography in the era of GeoAI.

We outline several important directions for the use of AI in cartography moving forward. First, our use of GANs can be extended to other mapping contexts to help cartographers deconstruct the most salient stylistic elements that constitute the unique look and feel of existing designs, and to use this information to improve future design iterations. Second, this research can help nonexperts who lack professional cartographic knowledge and experience to generate reasonable cartographic style sheet templates based on inspiration maps or visual art. Finally, integrating AI with cartographic design may automate part of the generalization process, a particularly promising avenue given the difficulty of updating high-resolution datasets and rendering new tilesets to support the 'map of everywhere'.

Here is the abstract:

The advancement of the Artificial Intelligence (AI) technologies makes it possible to learn stylistic design criteria from existing maps or other visual arts and transfer these styles to make new digital maps. In this paper, we propose a novel framework using AI for map style transfer applicable across multiple map scales. Specifically, we identify and transfer the stylistic elements from a target group of visual examples, including Google Maps, OpenStreetMap, and artistic paintings, to unstylized GIS vector data through two generative adversarial network (GAN) models. We then train a binary classifier based on a deep convolutional neural network to evaluate whether the transfer styled map images preserve the original map design characteristics. Our experiment results show that GANs have a great potential for multiscale map style transferring, but many challenges remain requiring future research.
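The two GAN models referred to in the abstract are Pix2Pix and CycleGAN (see the result links further down). As a rough illustration of how they differ, the sketch below contrasts a Pix2Pix-style paired reconstruction term, which needs an aligned target tile, with a CycleGAN-style cycle-consistency term, which does not. This is not the paper's code: the adversarial terms are omitted, images are flattened lists of pixel values, and `to_styled` and `to_simple` are hypothetical stand-ins for trained generators.

```python
from typing import Callable, List, Sequence

Image = Sequence[float]  # assumption: a flattened image with pixel values in [0, 1]


def l1_distance(a: Image, b: Image) -> float:
    """Mean absolute difference between two equally sized images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def paired_loss(generate: Callable[[Image], Image],
                simple: Image, target: Image) -> float:
    """Pix2Pix-style reconstruction term: requires an aligned (simple, target) pair."""
    return l1_distance(generate(simple), target)


def cycle_loss(forward: Callable[[Image], Image],
               backward: Callable[[Image], Image],
               simple: Image) -> float:
    """CycleGAN-style cycle-consistency term: no aligned target tile is needed."""
    return l1_distance(backward(forward(simple)), simple)


# Hypothetical generators, used only to exercise the loss functions.
def to_styled(img: Image) -> List[float]:
    return [min(1.0, p + 0.25) for p in img]


def to_simple(img: Image) -> List[float]:
    return [max(0.0, p - 0.25) for p in img]


simple_tile = [0.0, 0.25, 0.5]    # toy "simple styled" tile
styled_tile = [0.25, 0.5, 0.75]   # toy aligned "target styled" tile

paired = paired_loss(to_styled, simple_tile, styled_tile)
cycle = cycle_loss(to_styled, to_simple, simple_tile)
```

In a real training loop each of these terms would be combined with an adversarial loss from a discriminator network; the sketch only shows why one approach depends on aligned tile pairs and the other does not.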

You can also visit the following links to see some of the trained results:

CycleGAN at zoom level 15: https://geods.geography.wisc.edu/style_transfer/cyclegan15/

CycleGAN at zoom level 18: https://geods.geography.wisc.edu/style_transfer/cyclegan18/

Pix2Pix at zoom level 15: https://geods.geography.wisc.edu/style_transfer/pix2pix15/

Pix2Pix at zoom level 18: https://geods.geography.wisc.edu/style_transfer/pix2pix18/
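To give a sense of what zoom levels 15 and 18 mean, here is a small sketch that assumes the zoom levels follow the standard Web Mercator tile scheme used by OpenStreetMap, in which each zoom level doubles the number of tiles along each axis. The function names and the sample coordinates are illustrative only and do not come from the paper.

```python
import math
from typing import Tuple


def tiles_per_axis(zoom: int) -> int:
    """Number of tiles along one axis of the world map at a given zoom level."""
    return 2 ** zoom


def lonlat_to_tile(lon_deg: float, lat_deg: float, zoom: int) -> Tuple[int, int]:
    """Convert WGS84 longitude/latitude to tile (x, y) indices.

    Valid for latitudes within roughly +/-85.0511 degrees,
    the limit of the Web Mercator projection.
    """
    n = tiles_per_axis(zoom)
    x = int((lon_deg + 180.0) / 360.0 * n)
    lat_rad = math.radians(lat_deg)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    return x, y


# Hypothetical sample point (approximate coordinates, for illustration only).
sample_lon, sample_lat = -89.4, 43.07

tile_15 = lonlat_to_tile(sample_lon, sample_lat, 15)
tile_18 = lonlat_to_tile(sample_lon, sample_lat, 18)

# Going from zoom 15 to zoom 18 splits each tile into 8 x 8 = 64 smaller tiles.
subdivisions = tiles_per_axis(18) // tiles_per_axis(15)
```

Under this assumption, a single zoom-15 tile covers the same ground as 64 zoom-18 tiles, so the zoom-18 results show map features in much finer detail.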

Dataset available (only the simple styled maps are provided; the target styled maps cannot be shared because of Google's copyright):